If you've perused this blog, you know that I love probability. I was fortunate enough to see Al Cuoco and Alicia Chiasson give a really cool presentation at this year's NCTM conference about exploring the probabilities of dice sums geometrically and algebraically. Wheelhouse. After we got done looking at some student work and pictures of distributions, Al nonchalantly threw out the following question:

Is it possible to change the integer labels on two dice [from the standard 1,2,3,4,5,6] such that the distribution of sums remains unchanged?

Of course he was much cooler than that. I've significantly nerded up the language for the sake of brevity and clarity. Still, good question, right? And of course since our teacher has posed this tantalizing challenge, we know that the answer is yes, and now it's up to us to fill in the details. Thusly:

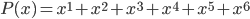

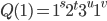

First let's make use of the Cuoco/Chiasson observation that we can represent the throw of a standard die with the polynomial

$$P(x) = x + x^2 + x^3 + x^4 + x^5 + x^6.$$

When we do it this way, the exponents represent the label values for each face, and the coefficients represent frequencies of each label landing face up (relative to the total sample space). This is neither surprising, nor super helpful. Each "sum" occurs once out of the six possible. We knew this already.

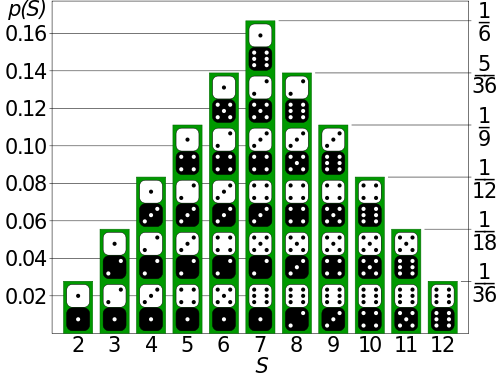

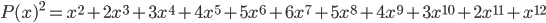

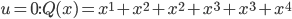

What is super helpful is that we can include n dice in our toss by expanding n factors of P(x). For two dice (the number in question), that looks like

$$P(x)^2 = x^2 + 2x^3 + 3x^4 + 4x^5 + 5x^6 + 6x^7 + 5x^8 + 4x^9 + 3x^{10} + 2x^{11} + x^{12}.$$

You can easily confirm that this jibes with the standard diagram. For instance the sum of 7 shows up most often (6 out of 36 times), which helps casinos make great heaps of money off of bettors on the come. Take a moment. Compare.
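If you'd rather let a computer do the comparing, here is a minimal sketch in Python. It assumes we store a polynomial as a plain list of coefficients, where the index is the exponent; the helper name `poly_mul` is my own invention for illustration and not anything from the original presentation.

```python
def poly_mul(p, q):
    """Multiply two polynomials stored as coefficient lists (index = exponent)."""
    out = [0] * (len(p) + len(q) - 1)
    for i, a in enumerate(p):
        for j, b in enumerate(q):
            out[i + j] += a * b
    return out

# P(x) = x + x^2 + ... + x^6: no constant term, then one face per label 1..6.
standard_die = [0, 1, 1, 1, 1, 1, 1]

# Tossing two dice corresponds to expanding P(x) * P(x).
two_dice = poly_mul(standard_die, standard_die)

# Each exponent is a sum; each coefficient counts the ways to roll it.
for total, ways in enumerate(two_dice):
    if ways:
        print(f"sum {total:2d}: {ways} of 36")
```

The coefficient sitting at index 7 should come out as 6, matching the 6-out-of-36 count above.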

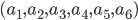

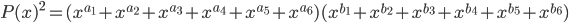

Okay, so now we know that the standard labels yield the standard distribution of sums. The question, though, is whether there are any other labels that do so as well. Here's where some abstract algebra comes in handy. Let's assume that there are, in fact, dice out there that satisfy this property. We can represent those with polynomials as well. We know that the coefficient on each term must still be 1 (each face will still come up 1 out of 6 times), but we don't yet know about the exponents (labels). So let's say the labels on the two dice are, respectively,

$$(a_1, a_2, a_3, a_4, a_5, a_6) \quad \text{and} \quad (b_1, b_2, b_3, b_4, b_5, b_6).$$

If we want the same exact sum distribution, it had better be true that

$$(x + x^2 + x^3 + x^4 + x^5 + x^6)^2 = (x^{a_1} + x^{a_2} + \cdots + x^{a_6})(x^{b_1} + x^{b_2} + \cdots + x^{b_6}).$$

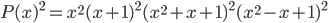

For future convenience (trust me), let's call the first polynomial factor on the right hand side Q(x). Great! Now we just have to figure out what all the a's and b's are. It helps that our polynomials belong to the ring Z[x], which is a unique factorization domain. A little factoring practice will show us that

$$x + x^2 + x^3 + x^4 + x^5 + x^6 = x(x + 1)(x^2 + x + 1)(x^2 - x + 1).$$

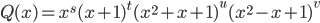
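If your factoring is rusty, you can at least check the factorization of the standard die polynomial, x(x + 1)(x² + x + 1)(x² − x + 1), by multiplying the four pieces back together. A rough sketch, again assuming coefficient lists indexed by exponent and a hypothetical `poly_mul` helper of my own:

```python
def poly_mul(p, q):
    """Multiply two polynomials stored as coefficient lists (index = exponent)."""
    out = [0] * (len(p) + len(q) - 1)
    for i, a in enumerate(p):
        for j, b in enumerate(q):
            out[i + j] += a * b
    return out

# The four factors of x + x^2 + ... + x^6 over the integers.
factors = [
    [0, 1],       # x
    [1, 1],       # x + 1
    [1, 1, 1],    # x^2 + x + 1
    [1, -1, 1],   # x^2 - x + 1
]

# Start from the constant polynomial 1 and multiply the factors in one at a time.
rebuilt = [1]
for factor in factors:
    rebuilt = poly_mul(rebuilt, factor)

standard_die = [0, 1, 1, 1, 1, 1, 1]
print(rebuilt == standard_die)
```

This only confirms that the product is right. It says nothing about irreducibility, which is the part the unique factorization argument actually leans on.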

We just have to rearrange these irreducible factors to get the answer we're looking for. Due to a theorem that is too long and frightening to reproduce here [waves hands frantically], we know that the unique factorization of Q(x), our polynomial with unknown exponents, must be of the form

$$Q(x) = x^s (x + 1)^t (x^2 + x + 1)^u (x^2 - x + 1)^v,$$

where s, t, u, and v are all either 0, 1, or 2. So that's good news, not too many possibilities to check. In fact, we can make our lives a little easier. First of all, notice that Q(1) must equal 6. Right? Q(x) has six terms, one per face, each with coefficient 1, so plugging in 1 just counts the faces. But then substituting 1 into the factored form gives us

$$Q(1) = 1^s \cdot 2^t \cdot 3^u \cdot 1^v = 6.$$

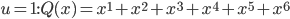

Clearly this means that t and u have to be 1, and we just have to nail down s and v. Well, if we take a look at Q(0), we also quickly realize that s can't be 0: every label is a positive integer, so Q(x) has no constant term and Q(0) must be 0. It can't be 2 either, because, if s is 2, then the smallest sum we could obtain on our dice would be 3, which is absolutely no good at all. So s is 1 as well. Let's see what happens in our three remaining cases, when v is 0, 1, and 2:

$$
\begin{aligned}
v = 0:&\quad x(x + 1)(x^2 + x + 1) = x + 2x^2 + 2x^3 + x^4 \\
v = 1:&\quad x(x + 1)(x^2 + x + 1)(x^2 - x + 1) = x + x^2 + x^3 + x^4 + x^5 + x^6 \\
v = 2:&\quad x(x + 1)(x^2 + x + 1)(x^2 - x + 1)^2 = x + x^3 + x^4 + x^5 + x^6 + x^8
\end{aligned}
$$

Check out those strange and beautiful labels! We can mark up the first die with the exponents from the v = 0 case, and the second die with the v = 2 case. When we multiply those two polynomials together we get back P(x)², which is precisely what we needed (check if you like)! Our other option, of course, is to label two dice with the v = 1 case, which corresponds to a standard die. And, thanks to unique factorization, we can be sure that there are no other cases. Not only have we found some different labels, we've found all of them!
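For the skeptical, here is a brute-force sketch of that case analysis. It takes the hand-waved theorem on faith: it assumes each die's polynomial is built from the four irreducible factors with exponents 0, 1, or 2, and that the second die gets whatever exponents the first one leaves over. All of the helper names (`poly_mul`, `build`, `is_die`, `labels`) are my own, made up for illustration.

```python
from itertools import product

def poly_mul(p, q):
    """Multiply two polynomials stored as coefficient lists (index = exponent)."""
    out = [0] * (len(p) + len(q) - 1)
    for i, a in enumerate(p):
        for j, b in enumerate(q):
            out[i + j] += a * b
    return out

# x, x + 1, x^2 + x + 1, x^2 - x + 1, in that order (exponents s, t, u, v).
FACTORS = [[0, 1], [1, 1], [1, 1, 1], [1, -1, 1]]

def build(exponents):
    """Expand x^s (x+1)^t (x^2+x+1)^u (x^2-x+1)^v into a coefficient list."""
    out = [1]
    for factor, power in zip(FACTORS, exponents):
        for _ in range(power):
            out = poly_mul(out, factor)
    return out

def is_die(poly):
    """Six faces, no face labeled 0, and no negative face counts."""
    return poly[0] == 0 and sum(poly) == 6 and all(c >= 0 for c in poly)

def labels(poly):
    """Turn a die polynomial into its sorted tuple of face labels."""
    return tuple(exp for exp, count in enumerate(poly) for _ in range(count))

pairs = set()
for exps in product(range(3), repeat=4):
    first = build(exps)
    second = build([2 - e for e in exps])  # the leftover factors of P(x)^2
    if is_die(first) and is_die(second):
        pairs.add(tuple(sorted([labels(first), labels(second)])))

for pair in sorted(pairs):
    print(pair)
```

Out of the 81 exponent choices, only two distinct pairs of dice should survive: the standard pair, and the pair with labels (1,2,2,3,3,4) and (1,3,4,5,6,8).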

If the a's on the first die are (1,2,2,3,3,4), then the b's end up being (1,3,4,5,6,8), and vice versa. And, comfortingly, if the a's on the first die are (1,2,3,4,5,6), then so are the b's on the second one.
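And if polynomials aren't your thing, you can skip the algebra entirely and just roll every combination. A quick sketch using only Python's standard library (the function name `sum_distribution` is mine):

```python
from collections import Counter
from itertools import product

def sum_distribution(die_a, die_b):
    """Count how many of the 36 equally likely rolls produce each sum."""
    return Counter(a + b for a, b in product(die_a, die_b))

standard = (1, 2, 3, 4, 5, 6)
first_die = (1, 2, 2, 3, 3, 4)
second_die = (1, 3, 4, 5, 6, 8)

usual = sum_distribution(standard, standard)
relabeled = sum_distribution(first_die, second_die)

# Two Counters compare equal when every sum has the same count in both.
print(usual == relabeled)  # True
```

This confirms that the new labels work, but not that they are the only ones. That second part is what the factorization argument buys us.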

Two dice with the standard 1-through-6 labels (the v = 1 case) are what you find at every craps table in the country. One die of each of the other labels forms a pair of Sicherman dice, and they are the only other dice that yield the same sum distribution. You could drop Sicherman dice in the middle of Vegas, and nobody would notice. At least in terms of money changing hands. The pit boss might take exception. Come to think of it, I cannot stress enough how important it is that you not attempt to switch out dice in Vegas. Your spine is also uniquely factorable...into irreducible vertebrae.

*This whole proof has been cribbed from Contemporary Abstract Algebra (2nd ed.), by Joseph A. Gallian. If you want the whole citation, click his name and scroll down.*