For just a moment, let's consider a staple of the second-year algebra curriculum: the one-dimensional projectile motion problem. (I used to do an awful lot of this sort of thing.) It's not a fantastic problem---it's overdone, and often poorly done---but it's representative of many of our standard modeling problems in some important ways:

- Every one of my students has participated in the activity we're modeling. They've thrown, dropped, and shot things. They've jumped and fallen and dove from various heights. In other words, they have a passing acquaintance with gravity.

- Data points are relatively easy to come by. All we need is a stopwatch and a projectile-worthy object. If that's impractical, then there are also some great and simple---and free---simulations out there (PhET, Angry Birds), and some great and simple---and free---data collection software as well (Tracker).

- We only need a few data points to fix the parameters. For a general quadratic model, three data points determine the particular solution. Really we only need two, if we assume the acceleration is the known constant g.

- Experiments are easy to repeat. Drop/throw/shoot the ball again. Run the applet again.

- The model conforms to a fairly nice and well-behaved family of functions. Quadratics are continuous and differentiable and smooth, and they're generally willing to submit to whatever mathematical poking we're wont to visit upon them without getting gnarly.

- Theoretical predictions are readily checked. Want to know, for instance, when our projectile will hit the ground? Find the sensible zero of the function (its reasonableness is easy to sanity-check; see the first condition above). Look at a table of values and step through the motion second by second (use a smaller Δt for an even better sense of what's going on). Click RUN on your simulation, and wait until it stops (self-explanatory). And, if you're completely dedicated, build yourself a cannon and put your money where your mouth is.
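For the projectile case, the parameter fit and the "sensible zero" each take only a few lines. The following is a minimal Python sketch, assuming exact, noise-free measurements of height (meters) against time (seconds). The function names and the sample data (a ball thrown upward at 15 m/s from 2 m, with g = 9.8 m/s²) are hypothetical.

```python
import math

def fit_three_points(points):
    """Return (a, b, c) for h(t) = a*t**2 + b*t + c passing exactly
    through three (t, h) measurements, via Newton divided differences."""
    (t0, h0), (t1, h1), (t2, h2) = points
    d01 = (h1 - h0) / (t1 - t0)          # first divided differences
    d12 = (h2 - h1) / (t2 - t1)
    a = (d12 - d01) / (t2 - t0)          # second divided difference is a
    b = d01 - a * (t0 + t1)
    c = h0 - a * t0**2 - b * t0
    return a, b, c

def fit_two_points(points, g=9.8):
    """If the acceleration is assumed to be the known constant -g,
    then a = -g/2 and two measurements fix b and c."""
    (t0, h0), (t1, h1) = points
    a = -g / 2
    # Remove the known a*t**2 term; what is left is a straight line in t.
    b = ((h1 - a * t1**2) - (h0 - a * t0**2)) / (t1 - t0)
    c = h0 - a * t0**2 - b * t0
    return a, b, c

def landing_time(a, b, c):
    """The 'sensible zero': the later root of a*t**2 + b*t + c = 0
    (assumes a < 0 and a launch at or above ground level)."""
    disc = math.sqrt(b * b - 4 * a * c)
    return max((-b + disc) / (2 * a), (-b - disc) / (2 * a))

# Hypothetical measurements of h(t) = -4.9 t^2 + 15 t + 2.
data = [(0.0, 2.0), (1.0, 12.1), (2.0, 12.4)]
a, b, c = fit_three_points(data)
print(f"h(t) = {a:.2f} t^2 + {b:.2f} t + {c:.2f}")
print(f"lands at t = {landing_time(a, b, c):.2f} s")

# Step through the motion as a table of values with time step dt.
dt = 0.5
t = 0.0
while a * t * t + b * t + c >= 0:
    print(f"{t:4.1f} s  {a * t * t + b * t + c:6.2f} m")
    t += dt
```

With real, noisy measurements you would fit more than three points by least squares instead; the exact fit is only meant to show how few numbers pin this model down.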

Of course I've chosen to introduce this discussion with the example of projectile motion, but there are plenty of other candidates: length/area/volume, exponential growth and decay, linear speed and distance. Almost without exception (in the algebra classroom), we model phenomena that satisfy the six conditions listed above.

Almost. Because then we run into probability, and probability isn't so tame. I'll grant that the first condition still holds (though I'm not entirely convinced it holds in the same sense), but the other five go out the window.

Data points are NOT easy to come by.

I can already hear you protesting. "Flip a coin...that's a data point!" Well, yes. Sort of. But in the realm of probability, individual data points are ambiguous. The ordered pair (3rd flip, heads) is very different from (3 seconds, 12 meters). They're both measurements, but the first one has much, much higher entropy. Interpretation becomes problematic. Here's another example: My meteorologist's incredibly sophisticated model (dart board?) made the following prediction yesterday: P(rain) = 0.6. In other words, the event "rain" was more likely than the event "not rain." It did not rain yesterday. How am I to understand this un-rain? Was the model right? If so, then I'm not terribly surprised it didn't rain. Was the model wrong? If so, then I'm not terribly surprised it didn't rain. In what sense have I collected "data"?

And what if I'm interested in a compound event? What if I want to know not just the result of a lone flip, but P(exactly 352 heads in 1000 flips)? Now a single data point suddenly consists of 1000 trials. So it turns out data points have the potential to be rather difficult to come by, which brings us to...
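To make the cost concrete, here is a small Python sketch that computes P(exactly 352 heads in 1000 flips) exactly and then produces one simulated "data point" for that compound event, which costs 1000 flips. It assumes a fair coin and Python 3.8 or later (for `math.comb`); the function name and seed are arbitrary choices.

```python
import math
import random

N_FLIPS, TARGET = 1000, 352

# Exact binomial probability of exactly TARGET heads in N_FLIPS fair flips.
p_exact = math.comb(N_FLIPS, TARGET) / 2**N_FLIPS
print(f"P(exactly {TARGET} heads) = {p_exact:.2e}")

def one_data_point(rng):
    """A single observation of the compound event costs 1000 simulated flips."""
    heads = sum(rng.random() < 0.5 for _ in range(N_FLIPS))
    return heads, heads == TARGET

rng = random.Random(2024)   # fixed seed so the run is repeatable
print(one_data_point(rng))  # a hit on exactly 352 is astronomically unlikely
```

The probability is so small because 352 sits more than nine standard deviations (each about 15.8 heads) below the mean of 500.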

We need an awful lot of data points.

I'm not talking about our 1000-flip trials here, which was just a result of my arbitrary choice of one particular problem. I mean that, no matter what our trials consist of, we need to do a whole bunch of them in order to build a reliable model. Two measurements in my projectile problem determine a unique curve and, in effect, answer any question I might want to ask. Two measurements in a probabilistic setting tell me just about nothing.

Consider this historical problem which, like many probability problems, was born from gambling. On each turn, a player rolls three dice and wins or loses money based on the sum (fill in your own details if you want; they're not so important for our purposes here). As savvy and degenerate gamblers, we'd like to know which sums are more or less likely. We have some nascent theoretical ideas, but we'd like to test one in particular. Is the probability of rolling a sum of 9 equal to the probability of rolling a sum of 10? It seems it should be: after all, there are six ways to roll a 9 ({6,2,1},{5,3,1},{5,2,2},{4,4,1},{4,3,2},{3,3,3}), and six ways to roll a 10 ({6,3,1},{6,2,2},{5,4,1},{5,3,2},{4,4,2},{4,3,3})*. Done, right?

It turns out this isn't quite accurate. For instance, the combination {6,2,1} treats all of the 3! = 6 permutations of those numbers as one event, which is bad mojo. If you go through all 216 possibilities, you'll find that there are actually 27 ways to roll a 10, and only 25 ways to roll a 9, so the probabilities are in fact unequal. Okay, no biggie, our experiment will certainly show this bias, right? Well, it will, but if we want to be 95% experimentally certain that 10 is more likely, then we'll have to run through about 7,600 trials! (For a derivation of this number---and a generally more expansive account---see Michael Lugo's blog post.) In other words, the Law of Large Numbers is certainly our friend in determining probabilities experimentally, but it requires, you know, large numbers.
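Both counts are easy to check by brute force in Python: count unordered combinations (the tempting but wrong approach) and then all 216 equally likely ordered rolls. The second half is one plausible back-of-the-envelope route to a figure near 7,600 trials. It assumes a normal approximation and a one-sided 95% criterion (the expected surplus of 10s over 9s must reach about 1.645 standard deviations), and it is not necessarily the derivation in Lugo's post.

```python
from collections import Counter
from itertools import combinations_with_replacement, product
from math import ceil
from statistics import NormalDist

# Naive count: unordered combinations {a, b, c} of three dice.
naive = Counter(sum(c) for c in combinations_with_replacement(range(1, 7), 3))
# Correct count: all 6**3 = 216 ordered outcomes, each equally likely.
ordered = Counter(sum(r) for r in product(range(1, 7), repeat=3))

print("combinations:", naive[9], "ways to make 9,", naive[10], "ways to make 10")
print("ordered rolls:", ordered[9], "ways to make 9,", ordered[10], "ways to make 10")

# Per roll, let Y = +1 on a sum of 10, -1 on a sum of 9, and 0 otherwise.
# After n rolls, (#10s - #9s) has mean n*mu and standard deviation sqrt(n*var).
p9, p10 = ordered[9] / 216, ordered[10] / 216
mu = p10 - p9
var = (p9 + p10) - mu**2
z = NormalDist().inv_cdf(0.95)          # one-sided 95% level, about 1.645
n_needed = ceil(z**2 * var / mu**2)     # solve n*mu = z*sqrt(n*var) for n
print("approximate trials needed:", n_needed)
```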

*If you've ever taught probability, you know that this type of dice-sense is rampant. Students consistently collapse distinct events based on superficial equivalence rather than true frequency. Ask a room of high school students this question: "You flip a coin twice. What's the probability of getting exactly one head?" A significant number will say 1/3. After all, there are three possibilities: no heads, one head, two heads. Relatively few will immediately notice, without guidance, that "one head" is twice as likely as the other two outcomes.
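Listing the four equally likely ordered outcomes makes the coin question concrete. A short Python check:

```python
from collections import Counter
from fractions import Fraction
from itertools import product

# Every ordered outcome of two flips is equally likely: HH, HT, TH, TT.
outcomes = list(product("HT", repeat=2))
heads = Counter(o.count("H") for o in outcomes)

for k in sorted(heads):
    print(k, "head(s):", Fraction(heads[k], len(outcomes)))
```

"One head" collects two of the four outcomes (HT and TH), so its probability is 1/2, twice that of either extreme.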

Experiments are NOT easy to repeat.

I've already covered some of the practical issues here in terms of needing a lot of data points. But beyond all that, there are also philosophical difficulties. Normally, in science, when we talk about repeating experiments, we tend to use the word "reproduce." Because that's exactly what we expect/are hoping for, right? I conduct an experiment. I get a result. I (or someone else) conduct the experiment again. I (they) get roughly the same result. Depending on how we define our probability experiment, that might not be the case. I flip a coin 10 times and count 3 heads. You flip a coin 10 times and count 6 heads. Experimental results that differ by 100% are not generally awesome in science. In probability, they are the norm.
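The exact binomial distribution shows how unremarkable that disagreement is. This Python sketch, assuming a fair coin, computes P(3 heads) and P(6 heads) in 10 flips, and the probability that two independent 10-flip experiments "reproduce" each other by reporting exactly the same count.

```python
from fractions import Fraction
from math import comb

n = 10
# Exact distribution of the number of heads in 10 fair flips.
pmf = {k: Fraction(comb(n, k), 2**n) for k in range(n + 1)}
print("P(3 heads) =", pmf[3], "  P(6 heads) =", pmf[6])

# Two independent experiments agree exactly with probability sum of pmf(k)**2.
p_same = sum(p * p for p in pmf.values())
print("P(same count) =", p_same, "≈", round(float(p_same), 4))
```

Exact agreement happens less than a fifth of the time, so two honest experimenters will usually report different counts.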

As an interesting, though somewhat tangential observation, note that there is another strange philosophical issue at play here. Not only can events be difficult to repeat, but sometimes they are fundamentally unrepeatable. Go back to my meteorologist's prediction for a moment. How do I repeat the experiment of "live through yesterday and see whether it rains"? And what does a 60% chance of rain even mean? To a high school student (teacher) who deals almost exclusively in frequentist interpretations of probability, it means something like, "If we could experience yesterday one million times, about 600,000 of those experiences would include rain." Which sounds borderline crazy. And the Bayesian degree-of-belief interpretation isn't much more comforting: "I believe, with 60% intensity, that it will rain today." How can we justify that level of belief without being able to test its reliability by being repeatedly correct? Discuss.

Probability distributions can be unwieldy.

Discrete distributions are conceptually easy, but cumbersome. Continuous distributions are beautiful for modeling, but practically impossible for prior-to-calculus students (not just pre-calculus ones). Even with the ubiquitous normal distribution, there is an awful lot of hand-waving going on in my classroom. Distributions can make polynomials look like first-grade stuff.

Theoretical predictions aren't so easily checked.

My theoretical calculations for the cereal box problem tell me that, on average, I expect to buy between 5 and 6 boxes to collect all the prizes. But sometimes when I actually run through the experiment, it takes me northward of 20 boxes! This is a teacher's nightmare. We've done everything right, and then suddenly our results are off by a factor of 4. Have we confirmed our theory? Have we busted it? Neither? Blurg. So what are we to do?
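The post doesn't say how many prizes are in play. This Python sketch assumes three equally likely prizes (a hypothetical choice that gives a theoretical average of 5.5 boxes, consistent with "between 5 and 6"), compares the coupon-collector formula n(1 + 1/2 + ... + 1/n) with a simulation, and reports how long the unluckiest runs get.

```python
import random
from fractions import Fraction

def expected_boxes(n_prizes):
    """Theoretical mean for the coupon collector: n * (1 + 1/2 + ... + 1/n)."""
    return n_prizes * sum(Fraction(1, k) for k in range(1, n_prizes + 1))

def boxes_until_complete(n_prizes, rng):
    """Buy boxes (each prize equally likely) until every prize has appeared."""
    seen, boxes = set(), 0
    while len(seen) < n_prizes:
        seen.add(rng.randrange(n_prizes))
        boxes += 1
    return boxes

N_PRIZES = 3          # hypothetical: chosen because it gives a mean of 5.5
TRIALS = 100_000
rng = random.Random(1)
results = [boxes_until_complete(N_PRIZES, rng) for _ in range(TRIALS)]

print("theory:", float(expected_boxes(N_PRIZES)))
print("simulated mean:", sum(results) / TRIALS)
print("longest run:", max(results))
print("share needing 20+ boxes:", sum(r >= 20 for r in results) / TRIALS)
```

The mean settles down quickly, but individual runs far above it still turn up, which is exactly the situation described above.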

We are to build a probability cannon!

With projectile motion problems, building a cannon is nice. It's cool. We get to launch things, which is awesome. With probability, I submit that it's a necessity. We need to generate data: it's the raw material from which conjecture is built, and the touchstone by which theory is tested. We need to (metaphorically) shoot some stuff and see where it lands. We need...simulations!

If your model converges quickly, then hand out some dice/coins/spinners. If it doesn't, teach your students how to use their calculators for something besides screwing up order of operations. Better yet, teach them how to tell a computer to do something instead of just watching/listening to it. (Python is free. If you own a Mac, you already have it.) Impress them with your wizardry by programming, right in front of their eyes, and with only a few lines of code, dice/coins/spinners that can be rolled/flipped/spun millions of times with the push of a button. Create your own freaking distributions with lovely, computer-generated histograms from your millions of trials. Make theories. Test theories. Experience anomalous results. See that they are anomalous. Bend the LLN to your will.
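A minimal sketch of such a cannon in Python, assuming fair dice: it rolls three dice many times (the gambling problem from earlier), tallies the sums, and prints a crude text histogram. The trial count and seed are arbitrary; raise the count to millions if you're patient.

```python
import random
from collections import Counter

def roll_three(rng):
    """One roll of three fair six-sided dice, returned as their sum."""
    return rng.randint(1, 6) + rng.randint(1, 6) + rng.randint(1, 6)

TRIALS = 200_000            # raise this to millions if you're patient
rng = random.Random(42)     # seeded so the histogram is reproducible
counts = Counter(roll_three(rng) for _ in range(TRIALS))

# Text histogram: one '#' per 0.5% of trials.
for total in range(3, 19):
    share = counts[total] / TRIALS
    print(f"{total:2d} {share:6.4f} " + "#" * round(share * 200))
```

The shares for 10 and 9 should hover near 27/216 ≈ 0.125 and 25/216 ≈ 0.116, though telling them apart reliably takes the large numbers discussed earlier.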

Exempli Gratia

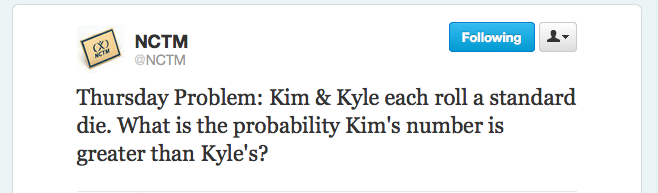

NCTM was kind enough to tweet the following problem today, as I was in the middle of writing this post:

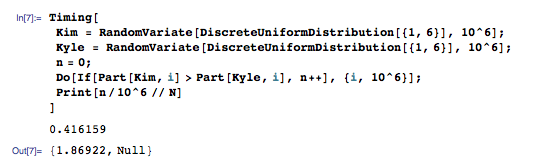

Okay, maybe the probability is just 1/2. I mean, any argument I make for Kim must be symmetrically true for Kyle, right? But wait, it says "greater than" and not "greater than or equal to," so maybe that changes things. Kim's number will be different from Kyle's most of the time, and it will be greater half of the times it's different, so...slightly less than 1/2? Or maybe I should break it down into mutually exclusive cases of {Kim rolls 1, Kim rolls 2, ... , Kim rolls 6}. You know what, let's build a cannon. Here it is, in Mathematica:

Okay, so it looks like my second conjecture is right; the probability is a little less than 1/2. Blammo! And it only took (after a few seconds of typing the code) 1.87 seconds to do a million trials. Double blammo! But how much less than 1/2? Emboldened by my cannon results, I can turn back to the theory. Now, if Kyle rolls a one, Kim will roll a not-one with probability 5/6. Ditto two, three, four, five, and six. So Kim's number is different from Kyle's 5/6 of the time. And---back to my symmetry argument---there should be no reason for us to believe one or the other person will roll a bigger number, so Kim's number is larger 1/2 of 5/6 of the time, which is 5/12 of the time. Does that work? Well, since 5/12 ≈ 0.4167, which is convincingly close to 0.416159, I should say that it does. Triple blammo and checkmate!
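The Mathematica cannon itself isn't reproduced here, so here is a rough Python analogue (function name and seed are arbitrary). It first checks the symmetry argument by listing all 36 equally likely (Kim, Kyle) rolls, then fires virtual dice for comparison.

```python
import random
from fractions import Fraction
from itertools import product

# All 36 equally likely (Kim, Kyle) rolls.
pairs = list(product(range(1, 7), repeat=2))
different = [(kim, kyle) for kim, kyle in pairs if kim != kyle]
kim_higher = [(kim, kyle) for kim, kyle in different if kim > kyle]

print("P(different)         =", Fraction(len(different), len(pairs)))       # 5/6
print("P(Kim higher | diff) =", Fraction(len(kim_higher), len(different)))  # 1/2
print("P(Kim higher)        =", Fraction(len(kim_higher), len(pairs)))      # 5/12

def fire(trials, rng):
    """The cannon: left die is Kim's, right die is Kyle's."""
    wins = sum(rng.randint(1, 6) > rng.randint(1, 6) for _ in range(trials))
    return wins / trials

print("simulated:", fire(200_000, random.Random(7)))
```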

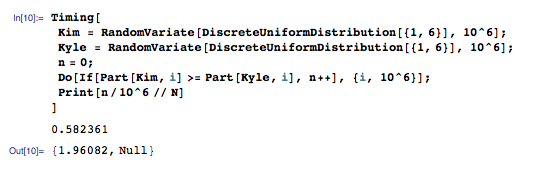

But we don't have to stop there. What if I remove the condition that Kim's number is strictly greater? What's the probability her number is greater than or equal to Kyle's? Now my original appeal to symmetry doesn't require any qualification. The probability ought simply to be 1/2. So...

What what? Why is the probability greater than 1/2 now? Oh, right. Kim's roll will be equal to Kyle's 1/6 of the time, and we already know it's strictly greater than Kyle's 5/12 of the time. Since those two outcomes are mutually exclusive, we can just add the probabilities, and 1/6 + 5/12 = 7/12, which is about (yup yup) 0.583. Not too shabby.

What if we add another person into the mix? We'll let Kevin join in the fun, too. What's the probability that Kim's number will be greater than both Kyle's and Kevin's?

It looks like the probability of Kim's number being greater than both of her friends' might just be about 1/4. Why? I leave it as an exercise to the reader.
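One cannon can handle all three variations. This Python sketch (function names hypothetical) takes a number of opponents and a `strict` flag, and reports both an exact enumeration over all 6^(k+1) rolls and a simulated estimate. It won't tell you *why* the three-player answer lands near 1/4; that part is still yours.

```python
import random
from fractions import Fraction
from itertools import product

def beats_all(kim, others, strict):
    """True if Kim's roll beats (or, if not strict, ties or beats) every opponent."""
    return all(kim > o if strict else kim >= o for o in others)

def exact_probability(opponents, strict=True):
    """Exact P(Kim beats every opponent), by enumerating all 6**(k+1) rolls."""
    wins = total = 0
    for kim, *others in product(range(1, 7), repeat=opponents + 1):
        total += 1
        wins += beats_all(kim, others, strict)
    return Fraction(wins, total)

def fire_cannon(opponents, trials, rng, strict=True):
    """Estimate the same probability by rolling virtual dice."""
    wins = 0
    for _ in range(trials):
        kim = rng.randint(1, 6)
        others = [rng.randint(1, 6) for _ in range(opponents)]
        wins += beats_all(kim, others, strict)
    return wins / trials

rng = random.Random(3)
for opponents, strict, label in [(1, True, "Kim > Kyle"),
                                 (1, False, "Kim >= Kyle"),
                                 (2, True, "Kim > Kyle and Kevin")]:
    p = exact_probability(opponents, strict)
    est = fire_cannon(opponents, 100_000, rng, strict)
    print(f"{label:22s} exact {p} ≈ {float(p):.4f}, simulated {est:.4f}")
```

The first two rows should reproduce the 5/12 and 7/12 worked out above.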

That tweet-sized problem easily becomes an entire lesson with the help of a relatively simple probability cannon. If that's not an argument for introducing them into your classroom, I don't know what is.

Ready. Aim. Fire!

Thanks to Christopher Danielson for sparking this whole discussion.

Fabulous. I dig the metaphor.

I would add that a certain amount of work has to be done so that students develop a bit of trust in the probability cannon. They need lots of experience seeing that the probability cannon correctly models the real world. In the example you cite, I'd like to see the game actually played several dozen times, enough to get a sense that it's not 1/2, before firing the cannon. Or I would need to have used the cannon enough times on other problems to know that it's not rigged and to be able to instinctively trust the results.

Plus, cannon construction is far from idiotproof. For a moderately complex probability question, there are plenty of mistakes one can make along the way.

You have done an excellent job of laying out important differences between studying probability and studying other real-world phenomena mathematically.

By the way, what does your cannon say about cereal boxes? And what does it say about the weather?

You're absolutely right about student experience. In no way am I suggesting that we should jump straight to the Mathematica cannon. I'm simply suggesting that there needs to be some cannon involved. Probability is the only topic that comes to mind where students won't default to experimentation in any form if left to their own devices. If I ask them about area of triangles, they'll draw some triangles; if I ask them about exponential growth, they'll toy with some numbers; if I ask them about the expected value of cereal box purchases, it will never occur to them to do anything but theoretical work. That needs to change. I need them to feel like some real-world numbers are within their reach for conjecturing. I want them to think, "Hey, we should try this out a little first!"

Low-tech versions of the cannon will work just fine. In fact, if I were really doing this problem in class, I would absolutely pair them up and hand out some dice. Or let them model it with their calculators...or whatever else they could think of. I like the Mathematica example because it's so simple and powerful. When I do a little on-the-spot programming in class, they're always amazed that (a) I learned how to do it, and (b) it's not so hard! They can learn, too. For free. And for most models, they can use any programming language that can generate some pseudorandom integers and run a FOR loop---which is to say any programming language they want. It's certainly not idiot-proof, and there are plenty of mistakes to be made, but isn't that a hallmark of a worthwhile endeavor?

I will make one pitch for the high-tech, high-powered cannon. There are some problems that are just fundamentally out of range of the low-tech guns. If I want to ask, for example, about the probability of being dealt four-of-a-kind in poker, it's going to take several class periods of doing nothing but dealing cards to see even a single instance. If you run 2,000 trials and see zero successes, that doesn't give you much to work with. In fact, the probability is 1/4165. That means that if I deal 4,165 hands, there's still better than a 1/3 chance that I won't see a single four-of-a-kind (very nearly a 1/e ≈ 0.368 chance)! That's definitely a job for the heavy artillery.
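The arithmetic behind those numbers fits in a few lines of Python, assuming a standard 52-card deck and independent five-card hands.

```python
from fractions import Fraction
from math import comb, exp

# Four of a kind: pick the rank (13 ways), take all four of its cards,
# then pick any one of the remaining 48 cards as the fifth.
p = Fraction(13 * 48, comb(52, 5))
print("P(four of a kind) =", p)   # 1/4165

# Chance of seeing no four-of-a-kind at all in a given number of hands.
for hands in (2000, 4165):
    p_none = (1 - float(p)) ** hands
    print(f"{hands} hands: P(none) = {p_none:.3f}")

print(f"1/e = {exp(-1):.3f}")
```

Since (1 - 1/n)^n approaches 1/e for large n, the 4,165-hand figure lands right next to 1/e, and a blank after 2,000 hands is actually the more likely outcome.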

I think that when I teach probability in the future, I'm going to approach it like some of the physics teachers I've been reading about: split up the class into "experimental" and "theoretical" groups and have them present complementary solutions. I like that idea a lot, and I think it fits well with the spirit of probability.

Your next book should be titled "The Probability Can(n)on".

By the way, what does your cannon say about MegaMillions? Should I buy a ticket?